This post is for Steve Si regarding the “E2B.cfg is MISSING” error with Easy2Boot.

I have four identical hard drives which have been removed from a QNAP NAS. I connected them to a PC and tried booting into Knoppix with E2B in order to access the four drives. This resulted in the above error. Easy2Boot v1.80 was installed on the USB stick.

After pressing Enter, an unreadable line appears at the top of the screen (some strange white pixels – see screenshots at the end).

Booting into Knoppix from DVD works. The four drives form a RAID 5 volume. Once Knoppix is booted (from DVD), the RAID volume can be assembled with mdadm --assemble --scan just fine.

Just as Steve said, the problem has something to do with the hard drives (or their partitions). E2B boots fine from USB after detaching all four SATA cables. The PC has four SATA ports. I tried connecting the drives in different orders and realized that only one of the four drives causes this error. I don’t know why only this disk is affected. All four should be the same, since they are from the same RAID array.

The drives were physically labeled 1 to 4 with a felt pen. The drive which causes the problem is called drive 3.

UPDATE 2016-07-12:

The drive has four partitions. To make the problem reproducible for others, I created an image of the whole drive. Since the drive is really large (1TB), I filled most of each partition with zeros, keeping only the first 64KB and the RAID superblock at the end of each partition. That way, the drive is almost empty and the image compresses down to about 1MB. I verified that the zeroed image still causes the error by writing it to a different disk. Now the other disk also causes the problem.

Here is the link to the compressed image: backup.img.bz2

The image can be written back to a physical disk like this:

bzip2 -c -d backup.img.bz2 | dd of=/dev/sda bs=64K

NOTE: On my first try, I filled all of the partitions except the RAID superblocks with zeros. That image did not cause the problem. So on a hunch I also kept the first 64KB of each partition. Whatever is causing the problem therefore has to do with some data at the beginning of one or more partitions.
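The zero-fill plan described in this update can be sketched in a few lines of Python. This is only an illustration, not the procedure I actually ran: the function names are made up, the partition boundaries are taken from the fdisk listing further down, and the size of the preserved tail (128 KiB here) is an assumption, since the exact size kept for the RAID superblock is not recorded in this post.

```python
SECTOR = 512
KIB = 1024

# Partition boundaries (start LBA, end LBA inclusive) from the fdisk output in this post.
PARTITIONS = [
    (40, 1060289),
    (1060296, 2120579),
    (2120584, 1952507969),
    (1952507976, 1953503999),
]


def zero_range(start_lba, sectors, keep_head=64 * KIB, keep_tail=128 * KIB):
    """Return the (begin, end) byte offsets to overwrite with zeros, end exclusive.

    keep_head bytes at the start of the partition and keep_tail bytes at its
    end are left untouched. keep_tail is an assumed value, not a measured one.
    Returns None if the partition is too small to have anything left to zero.
    """
    begin = start_lba * SECTOR + keep_head
    end = (start_lba + sectors) * SECTOR - keep_tail
    return (begin, end) if begin < end else None


def plan(partitions=PARTITIONS):
    """Compute the zero range for every partition in the list."""
    return [zero_range(start, end - start + 1) for start, end in partitions]


if __name__ == "__main__":
    for number, rng in enumerate(plan(), start=1):
        print(f"partition {number}: zero bytes {rng}")
```

The script only prints byte ranges; actually overwriting them on a disk is deliberately left out, because a wrong offset there destroys data.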

UPDATE 2016-07-13:

I did some further experiments. First, the RAID superblocks have no influence: the problem persisted even after overwriting them all with zeros. Then I removed the partitions one by one from the partition table. This showed that only the third partition causes the problem. Unfortunately, the third partition is the largest one, at about 930GB. So I created a new disk with a single small partition which contained only the troublesome first 64KB of the former third partition. Here I found out that the problem goes away when the partition is too small. I don’t know the size limit, though. The problem showed up again once I set the size of the partition to nearly 1TB.

I was now even able to reproduce the whole thing inside a VirtualBox VM. I created a sparse vdi of about 1TB. Since it’s almost empty though, it only uses a couple MB on the host machine. Once E2B is booted with Virtual Machine USB Boot inside VirtualBox, the error message appears.

Here is a copy of the virtual machine: E2BTest_VBox.zip

And here are the MBR and the first 64KB of the partition (the drive in the VM already contains a disk with that data):

MBR: mbr_v4.bin

First 64K of partition: part_start.bin
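For anyone who wants to rebuild a raw test disk from these two files, here is a rough Python sketch. It is not how I created the VM disk. It assumes the MBR file is a full 512-byte sector with a valid partition entry, it takes the partition start and length from that entry, and the helper names (`first_partition`, `build_sparse_image`) are invented for this example. Whether the resulting file is actually sparse depends on the host file system.

```python
import struct

SECTOR = 512


def first_partition(mbr: bytes):
    """Return (start_lba, sector_count) of the first non-empty MBR entry."""
    if len(mbr) < 512 or mbr[510:512] != b"\x55\xaa":
        raise ValueError("not a valid MBR sector")
    for i in range(4):
        entry = mbr[446 + 16 * i : 462 + 16 * i]
        ptype = entry[4]
        start, count = struct.unpack_from("<II", entry, 8)
        if ptype != 0 and count != 0:
            return start, count
    raise ValueError("no partition found in MBR")


def build_sparse_image(path, mbr: bytes, part_head: bytes):
    """Write the MBR at offset 0 and part_head at the start of the partition.

    The file is then sized to the end of the partition. The gap between the
    written regions is never written, so it can stay unallocated on disk.
    """
    start, count = first_partition(mbr)
    with open(path, "wb") as f:
        f.write(mbr[:512])
        f.seek(start * SECTOR)
        f.write(part_head)
        f.truncate((start + count) * SECTOR)
    return start, count
```

A call would look like `build_sparse_image("test.img", open("mbr_v4.bin", "rb").read(), open("part_start.bin", "rb").read())`. The output file name is arbitrary, and a raw image like this still has to be converted before VirtualBox can use it.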

UPDATE 2016-08-12:

Steve reported that the bug in grub4dos has been fixed, and I can confirm that the problem is gone in the latest version of Easy2Boot.

Hexdump of the first few bytes of each drive

Drive 3: This is the drive which causes the error:

00000000 fa b8 00 10 8e d0 bc 00 b0 b8 00 00 8e d8 8e c0 |................|
00000010 fb be 00 7c bf 00 06 b9 00 02 f3 a4 ea 21 06 00 |...|.........!..|
00000020 00 be be 07 38 04 75 0b 83 c6 10 81 fe fe 07 75 |....8.u........u|
00000030 f3 eb 16 b4 02 b0 01 bb 00 7c b2 80 8a 74 01 8b |.........|...t..|
00000040 4c 02 cd 13 ea 00 7c 00 00 eb fe 00 00 00 00 00 |L.....|.........|
00000050 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
000001b0 00 00 00 00 00 00 00 00 5b ba 0e 00 00 00 00 00 |........[.......|
000001c0 29 00 83 fe 3f 41 28 00 00 00 9a 2d 10 00 00 00 |)...?A(....-....|
000001d0 07 42 83 fe 3f 83 c8 2d 10 00 bc 2d 10 00 00 00 |.B..?..-...-....|
000001e0 05 84 83 fe ff ff 88 5b 20 00 ba 8c 40 74 00 fe |.......[ ...@t..|
000001f0 ff ff 83 fe ff ff 48 e8 60 74 b8 32 0f 00 55 aa |......H.`t.2..U.|
00000200 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005000 6d 64 61 64 6d 3a 20 61 64 64 65 64 20 2f 64 65 |mdadm: added /de|
00005010 76 2f 73 64 64 31 0a 00 00 00 00 00 00 00 00 00 |v/sdd1..........|
00005020 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005400 b0 81 00 00 a0 05 02 00 00 00 00 00 f8 88 01 00 |................|
00005410 9f 80 00 00 00 00 00 00 02 00 00 00 02 00 00 00 |................|
00005420 00 80 00 00 00 80 00 00 f0 19 00 00 0a 80 60 57 |..............`W|
00005430 0a 80 60 57 07 00 27 00 53 ef 01 00 01 00 00 00 |..`W..'.S.......|
00005440 49 25 60 57 00 4e ed 00 00 00 00 00 01 00 00 00 |I%`W.N..........|
00005450 00 00 00 00 0b 00 00 00 00 01 00 00 3c 00 00 00 |............<...|
00005460 02 00 00 00 03 00 00 00 49 45 de 80 4d 66 49 95 |........IE..MfI.|
00005470 ac 04 25 bf 75 23 63 e7 00 00 00 00 00 00 00 00 |..%.u#c.........|
00005480 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*

Drive 1:

00000000 fa b8 00 10 8e d0 bc 00 b0 b8 00 00 8e d8 8e c0 |................|
00000010 fb be 00 7c bf 00 06 b9 00 02 f3 a4 ea 21 06 00 |...|.........!..|
00000020 00 be be 07 38 04 75 0b 83 c6 10 81 fe fe 07 75 |....8.u........u|
00000030 f3 eb 16 b4 02 b0 01 bb 00 7c b2 80 8a 74 01 8b |.........|...t..|
00000040 4c 02 cd 13 ea 00 7c 00 00 eb fe 00 00 00 00 00 |L.....|.........|
00000050 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
000001b0 00 00 00 00 00 00 00 00 1c 9a 08 00 00 00 00 00 |................|
000001c0 29 00 83 fe 3f 41 28 00 00 00 9a 2d 10 00 00 00 |)...?A(....-....|
000001d0 07 42 83 fe 3f 83 c8 2d 10 00 bc 2d 10 00 00 00 |.B..?..-...-....|
000001e0 05 84 83 fe ff ff 88 5b 20 00 ba 8c 40 74 00 fe |.......[ ...@t..|
000001f0 ff ff 83 fe ff ff 48 e8 60 74 b8 32 0f 00 55 aa |......H.`t.2..U.|
00000200 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005000 6d 64 61 64 6d 3a 20 61 64 64 65 64 20 2f 64 65 |mdadm: added /de|
00005010 76 2f 73 64 64 31 0a 00 00 00 00 00 00 00 00 00 |v/sdd1..........|
00005020 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005400 b0 81 00 00 a0 05 02 00 00 00 00 00 f8 88 01 00 |................|
00005410 9f 80 00 00 00 00 00 00 02 00 00 00 02 00 00 00 |................|
00005420 00 80 00 00 00 80 00 00 f0 19 00 00 b7 fb 66 57 |..............fW|
00005430 b7 fb 66 57 0b 00 27 00 53 ef 01 00 01 00 00 00 |..fW..'.S.......|
00005440 49 25 60 57 00 4e ed 00 00 00 00 00 01 00 00 00 |I%`W.N..........|
00005450 00 00 00 00 0b 00 00 00 00 01 00 00 3c 00 00 00 |............<...|
00005460 02 00 00 00 03 00 00 00 49 45 de 80 4d 66 49 95 |........IE..MfI.|
00005470 ac 04 25 bf 75 23 63 e7 00 00 00 00 00 00 00 00 |..%.u#c.........|
00005480 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*

Drive 2:

00000000 fa b8 00 10 8e d0 bc 00 b0 b8 00 00 8e d8 8e c0 |................|
00000010 fb be 00 7c bf 00 06 b9 00 02 f3 a4 ea 21 06 00 |...|.........!..|
00000020 00 be be 07 38 04 75 0b 83 c6 10 81 fe fe 07 75 |....8.u........u|
00000030 f3 eb 16 b4 02 b0 01 bb 00 7c b2 80 8a 74 01 8b |.........|...t..|
00000040 4c 02 cd 13 ea 00 7c 00 00 eb fe 00 00 00 00 00 |L.....|.........|
00000050 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
000001b0 00 00 00 00 00 00 00 00 74 6a 00 00 00 00 00 00 |........tj......|
000001c0 29 00 83 fe 3f 41 28 00 00 00 9a 2d 10 00 00 00 |)...?A(....-....|
000001d0 07 42 83 fe 3f 83 c8 2d 10 00 bc 2d 10 00 00 00 |.B..?..-...-....|
000001e0 05 84 83 fe ff ff 88 5b 20 00 ba 8c 40 74 00 fe |.......[ ...@t..|
000001f0 ff ff 83 fe ff ff 48 e8 60 74 b8 32 0f 00 55 aa |......H.`t.2..U.|
00000200 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005000 6d 64 61 64 6d 3a 20 61 64 64 65 64 20 2f 64 65 |mdadm: added /de|
00005010 76 2f 73 64 64 31 0a 00 00 00 00 00 00 00 00 00 |v/sdd1..........|
00005020 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005400 b0 81 00 00 a0 05 02 00 00 00 00 00 f8 88 01 00 |................|
00005410 9f 80 00 00 00 00 00 00 02 00 00 00 02 00 00 00 |................|
00005420 00 80 00 00 00 80 00 00 f0 19 00 00 9e f3 66 57 |..............fW|
00005430 9e f3 66 57 09 00 27 00 53 ef 01 00 01 00 00 00 |..fW..'.S.......|
00005440 49 25 60 57 00 4e ed 00 00 00 00 00 01 00 00 00 |I%`W.N..........|
00005450 00 00 00 00 0b 00 00 00 00 01 00 00 3c 00 00 00 |............<...|
00005460 02 00 00 00 03 00 00 00 49 45 de 80 4d 66 49 95 |........IE..MfI.|
00005470 ac 04 25 bf 75 23 63 e7 00 00 00 00 00 00 00 00 |..%.u#c.........|
00005480 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*

Drive 4:

00000000 fa b8 00 10 8e d0 bc 00 b0 b8 00 00 8e d8 8e c0 |................|
00000010 fb be 00 7c bf 00 06 b9 00 02 f3 a4 ea 21 06 00 |...|.........!..|
00000020 00 be be 07 38 04 75 0b 83 c6 10 81 fe fe 07 75 |....8.u........u|
00000030 f3 eb 16 b4 02 b0 01 bb 00 7c b2 80 8a 74 01 8b |.........|...t..|
00000040 4c 02 cd 13 ea 00 7c 00 00 eb fe 00 00 00 00 00 |L.....|.........|
00000050 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
000001b0 00 00 00 00 00 00 00 00 79 32 05 00 00 00 00 00 |........y2......|
000001c0 29 00 83 fe 3f 41 28 00 00 00 9a 2d 10 00 00 00 |)...?A(....-....|
000001d0 07 42 83 fe 3f 83 c8 2d 10 00 bc 2d 10 00 00 00 |.B..?..-...-....|
000001e0 05 84 83 fe ff ff 88 5b 20 00 ba 8c 40 74 00 fe |.......[ ...@t..|
000001f0 ff ff 83 fe ff ff 48 e8 60 74 b8 32 0f 00 55 aa |......H.`t.2..U.|
00000200 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005000 6d 64 61 64 6d 3a 20 61 64 64 65 64 20 2f 64 65 |mdadm: added /de|
00005010 76 2f 73 64 64 31 0a 00 00 00 00 00 00 00 00 00 |v/sdd1..........|
00005020 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
00005400 b0 81 00 00 a0 05 02 00 00 00 00 00 f8 88 01 00 |................|
00005410 9f 80 00 00 00 00 00 00 02 00 00 00 02 00 00 00 |................|
00005420 00 80 00 00 00 80 00 00 f0 19 00 00 7a ff 66 57 |............z.fW|
00005430 7a ff 66 57 0d 00 27 00 53 ef 01 00 01 00 00 00 |z.fW..'.S.......|
00005440 49 25 60 57 00 4e ed 00 00 00 00 00 01 00 00 00 |I%`W.N..........|
00005450 00 00 00 00 0b 00 00 00 00 01 00 00 3c 00 00 00 |............<...|
00005460 02 00 00 00 03 00 00 00 49 45 de 80 4d 66 49 95 |........IE..MfI.|
00005470 ac 04 25 bf 75 23 63 e7 00 00 00 00 00 00 00 00 |..%.u#c.........|
00005480 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 00 |................|
*
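To make the hexdumps easier to compare, here is a small Python sketch that decodes the disk signature and the four partition entries from bytes 0x1b0 to 0x1ff of drive 3's first sector. The byte string is copied from the hexdump above; the function name `decode_mbr_tail` is made up for this example. It uses the classic MBR layout (signature at 0x1b8, four 16-byte entries from 0x1be, little-endian LBA start and sector count).

```python
import struct

# Bytes 0x1b0..0x1ff of sector 0 of drive 3, copied from the hexdump above.
MBR_TAIL_HEX = """
00 00 00 00 00 00 00 00 5b ba 0e 00 00 00 00 00
29 00 83 fe 3f 41 28 00 00 00 9a 2d 10 00 00 00
07 42 83 fe 3f 83 c8 2d 10 00 bc 2d 10 00 00 00
05 84 83 fe ff ff 88 5b 20 00 ba 8c 40 74 00 fe
ff ff 83 fe ff ff 48 e8 60 74 b8 32 0f 00 55 aa
"""


def decode_mbr_tail(tail: bytes):
    """Decode the disk signature and partition entries from bytes 0x1b0..0x1ff."""
    if len(tail) != 80 or tail[78:80] != b"\x55\xaa":
        raise ValueError("expected 80 bytes ending in the 55 aa boot signature")
    signature = struct.unpack_from("<I", tail, 0x1B8 - 0x1B0)[0]
    partitions = []
    for i in range(4):
        off = (0x1BE - 0x1B0) + 16 * i
        ptype = tail[off + 4]
        start, count = struct.unpack_from("<II", tail, off + 8)
        partitions.append(
            {"bootable": tail[off] == 0x80, "type": ptype,
             "start": start, "end": start + count - 1}
        )
    return signature, partitions


tail = bytes.fromhex("".join(MBR_TAIL_HEX.split()))
signature, partitions = decode_mbr_tail(tail)

if __name__ == "__main__":
    print(f"disk identifier: 0x{signature:08x}")
    for number, p in enumerate(partitions, start=1):
        print(number, hex(p["type"]), p["start"], p["end"])
```

The decoded identifier is 0x000eba5b, which is the disk that fdisk lists as /dev/sdb further down, and the start and end sectors match the fdisk listing. In the dumps above, the four MBRs differ only in this four-byte identifier; the partition table entries are byte-for-byte the same.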

Information about the system

Drives: 4 x Samsung HD103UJ 1000GB SATA

lshw

root@Microknoppix:/home/knoppix# lshw -short

H/W path Device Class Description

==================================================

system HP Compaq dc7100 CMT(PL238ES)

/0 bus 0968h

/0/1 memory 128KiB BIOS

/0/5 processor Pentium 4

/0/5/6 memory 28KiB L1 cache

/0/5/7 memory 1MiB L2 cache

/0/33 memory System Memory

/0/33/0 memory 512MiB DIMM DDR Synchronous 400 MHz (2.5 ns)

/0/33/1 memory DIMM DDR Synchronous [empty]

/0/33/2 memory 512MiB DIMM DDR Synchronous 400 MHz (2.5 ns)

/0/33/3 memory DIMM DDR Synchronous [empty]

/0/34 memory Flash Memory

/0/34/0 memory 512KiB Chip FLASH Non-volatile

/0/0 memory

/0/2 memory

/0/100 bridge 82915G/P/GV/GL/PL/910GL Memory Controller Hub

/0/100/2 display 82915G/GV/910GL Integrated Graphics Controller

/0/100/2.1 display 82915G Integrated Graphics Controller

/0/100/1c bridge 82801FB/FBM/FR/FW/FRW (ICH6 Family) PCI Express Port 1

/0/100/1c.1 bridge 82801FB/FBM/FR/FW/FRW (ICH6 Family) PCI Express Port 2

/0/100/1c.1/0 eth0 network NetXtreme BCM5751 Gigabit Ethernet PCI Express

/0/100/1d bus 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #1

/0/100/1d.1 bus 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #2

/0/100/1d.2 bus 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #3

/0/100/1d.3 bus 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #4

/0/100/1d.7 bus 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB2 EHCI Controller

/0/100/1e bridge 82801 PCI Bridge

/0/100/1e.2 multimedia 82801FB/FBM/FR/FW/FRW (ICH6 Family) AC'97 Audio Controller

/0/100/1f bridge 82801FB/FR (ICH6/ICH6R) LPC Interface Bridge

/0/100/1f.1 storage 82801FB/FBM/FR/FW/FRW (ICH6 Family) IDE Controller

/0/100/1f.2 storage 82801FB/FW (ICH6/ICH6W) SATA Controller

/0/3 scsi0 storage

/0/3/0.0.0 /dev/cdrom disk RW/DVD GCC-4481B

/0/3/0.0.0/0 /dev/cdrom disk

/0/4 scsi2 storage

/0/4/0.0.0 /dev/sda disk 1TB SAMSUNG HD103UJ

/0/4/0.0.0/1 /dev/sda1 volume 517MiB EXT3 volume

/0/4/0.0.0/2 /dev/sda2 volume 517MiB Linux swap volume

/0/4/0.0.0/3 /dev/sda3 volume 2790GiB EXT4 volume

/0/4/0.0.0/4 /dev/sda4 volume 486MiB EXT3 volume

/0/4/0.1.0 /dev/sdb disk 1TB SAMSUNG HD103UJ

/0/4/0.1.0/1 /dev/sdb1 volume 517MiB EXT3 volume

/0/4/0.1.0/2 /dev/sdb2 volume 517MiB Linux swap volume

/0/4/0.1.0/3 /dev/sdb3 volume 930GiB Linux filesystem partition

/0/4/0.1.0/4 /dev/sdb4 volume 486MiB EXT3 volume

/0/6 scsi3 storage

/0/6/0.0.0 /dev/sdc disk 1TB SAMSUNG HD103UJ

/0/6/0.0.0/1 /dev/sdc1 volume 517MiB EXT3 volume

/0/6/0.0.0/2 /dev/sdc2 volume 517MiB Linux filesystem partition

/0/6/0.0.0/3 /dev/sdc3 volume 3046GiB EXT4 volume

/0/6/0.0.0/4 /dev/sdc4 volume 486MiB EXT3 volume

/0/6/0.1.0 /dev/sdd disk 1TB SAMSUNG HD103UJ

/0/6/0.1.0/1 /dev/sdd1 volume 517MiB EXT3 volume

/0/6/0.1.0/2 /dev/sdd2 volume 517MiB Linux swap volume

/0/6/0.1.0/3 /dev/sdd3 volume 930GiB Linux filesystem partition

/0/6/0.1.0/4 /dev/sdd4 volume 486MiB EXT3 volume

/0/7 scsi4 storage

/0/7/0.0.0 /dev/sde disk 15GB SCSI Disk

/0/7/0.0.0/1 /dev/sde1 volume 14GiB Windows NTFS volume

/0/7/0.0.0/2 /dev/sde2 volume 31KiB Primary partition

/0/7/0.0.0/4 /dev/sde4 volume 4061MiB Empty partition

fdisk -l (with all four drives attached)

knoppix@Microknoppix:~$ fdisk -l

Disk /dev/sda: 1000.2 GB, 1000204886016 bytes
255 heads, 63 sectors/track, 121601 cylinders, total 1953525168 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00089a1c

Device Boot Start End Blocks Id System
/dev/sda1 40 1060289 530125 83 Linux
/dev/sda2 1060296 2120579 530142 83 Linux
/dev/sda3 2120584 1952507969 975193693 83 Linux
/dev/sda4 1952507976 1953503999 498012 83 Linux

Disk /dev/sdb: 1000.2 GB, 1000204886016 bytes
255 heads, 63 sectors/track, 121601 cylinders, total 1953525168 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x000eba5b

Device Boot Start End Blocks Id System
/dev/sdb1 40 1060289 530125 83 Linux
/dev/sdb2 1060296 2120579 530142 83 Linux
/dev/sdb3 2120584 1952507969 975193693 83 Linux
/dev/sdb4 1952507976 1953503999 498012 83 Linux

Disk /dev/sdc: 1000.2 GB, 1000204886016 bytes
255 heads, 63 sectors/track, 121601 cylinders, total 1953525168 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00053279

Device Boot Start End Blocks Id System
/dev/sdc1 40 1060289 530125 83 Linux
/dev/sdc2 1060296 2120579 530142 83 Linux
/dev/sdc3 2120584 1952507969 975193693 83 Linux
/dev/sdc4 1952507976 1953503999 498012 83 Linux

Disk /dev/sdd: 1000.2 GB, 1000204886016 bytes
255 heads, 63 sectors/track, 121601 cylinders, total 1953525168 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x00006a74

Device Boot Start End Blocks Id System
/dev/sdd1 40 1060289 530125 83 Linux
/dev/sdd2 1060296 2120579 530142 83 Linux
/dev/sdd3 2120584 1952507969 975193693 83 Linux
/dev/sdd4 1952507976 1953503999 498012 83 Linux

Disk /dev/sde: 15.5 GB, 15504900096 bytes
255 heads, 63 sectors/track, 1885 cylinders, total 30283008 sectors
Units = sectors of 1 * 512 = 512 bytes
Sector size (logical/physical): 512 bytes / 512 bytes
I/O size (minimum/optimal): 512 bytes / 512 bytes
Disk identifier: 0x7e547e54

Device Boot Start End Blocks Id System
/dev/sde1 * 2048 30266396 15132174+ 7 HPFS/NTFS/exFAT
/dev/sde2 30266397 30266459 31+ 21 Unknown
/dev/sde4 21271896 29589043 4158574 0 Empty

lspci

root@Microknoppix:/home/knoppix# lspci
00:00.0 Host bridge: Intel Corporation 82915G/P/GV/GL/PL/910GL Memory Controller Hub (rev 04)
00:02.0 VGA compatible controller: Intel Corporation 82915G/GV/910GL Integrated Graphics Controller (rev 04)
00:02.1 Display controller: Intel Corporation 82915G Integrated Graphics Controller (rev 04)
00:1c.0 PCI bridge: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) PCI Express Port 1 (rev 03)
00:1c.1 PCI bridge: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) PCI Express Port 2 (rev 03)
00:1d.0 USB controller: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #1 (rev 03)
00:1d.1 USB controller: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #2 (rev 03)
00:1d.2 USB controller: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #3 (rev 03)
00:1d.3 USB controller: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB UHCI #4 (rev 03)
00:1d.7 USB controller: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) USB2 EHCI Controller (rev 03)
00:1e.0 PCI bridge: Intel Corporation 82801 PCI Bridge (rev d3)
00:1e.2 Multimedia audio controller: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) AC'97 Audio Controller (rev 03)
00:1f.0 ISA bridge: Intel Corporation 82801FB/FR (ICH6/ICH6R) LPC Interface Bridge (rev 03)
00:1f.1 IDE interface: Intel Corporation 82801FB/FBM/FR/FW/FRW (ICH6 Family) IDE Controller (rev 03)
00:1f.2 IDE interface: Intel Corporation 82801FB/FW (ICH6/ICH6W) SATA Controller (rev 03)
40:00.0 Ethernet controller: Broadcom Corporation NetXtreme BCM5751 Gigabit Ethernet PCI Express (rev 01)

lscpu

root@Microknoppix:/home/knoppix# lscpu
Architecture: i686
CPU op-mode(s): 32-bit
Byte Order: Little Endian
CPU(s): 1
On-line CPU(s) list: 0
Thread(s) per core: 1
Core(s) per socket: 1
Socket(s): 1
Vendor ID: GenuineIntel
CPU family: 15
Model: 4
Stepping: 1
CPU MHz: 2994.432
BogoMIPS: 5991.40
L1d cache: 16K
L2 cache: 1024K

cat /proc/meminfo

root@Microknoppix:/home/knoppix# cat /proc/meminfo
MemTotal: 1020092 kB
MemFree: 476776 kB
MemAvailable: 697384 kB
Buffers: 816 kB
Cached: 422340 kB
SwapCached: 0 kB
Active: 163044 kB
Inactive: 328932 kB
Active(anon): 73260 kB
Inactive(anon): 164464 kB
Active(file): 89784 kB
Inactive(file): 164468 kB
Unevictable: 0 kB
Mlocked: 0 kB
HighTotal: 130968 kB
HighFree: 60240 kB
LowTotal: 889124 kB
LowFree: 416536 kB
SwapTotal: 765068 kB
SwapFree: 765068 kB
Dirty: 0 kB
Writeback: 0 kB
AnonPages: 68820 kB
Mapped: 66160 kB
Shmem: 168904 kB
Slab: 33984 kB
SReclaimable: 18024 kB
SUnreclaim: 15960 kB
KernelStack: 1824 kB
PageTables: 2604 kB
NFS_Unstable: 0 kB
Bounce: 0 kB
WritebackTmp: 0 kB
CommitLimit: 1275112 kB
Committed_AS: 655208 kB
VmallocTotal: 122880 kB
VmallocUsed: 15648 kB
VmallocChunk: 101864 kB
HardwareCorrupted: 0 kB
HugePages_Total: 0
HugePages_Free: 0
HugePages_Rsvd: 0
HugePages_Surp: 0
Hugepagesize: 4096 kB
DirectMap4k: 24568 kB
DirectMap4M: 884736 kB

Here are some screenshots:

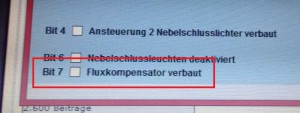

Added 2016-06-20: Hexdump with grub of bad disk: